Neuralink's first human trial – Cause for celebration or worry?

On the benefits and risks brain-computer interface technologies pose and how we should move forward

On Tuesday, Elon Musk announced that his company Neuralink had successfully implanted its first wireless brain chip - an innovative brain-computer interface - into a human.1

This is super exciting but arguably also scary. In this post, I want to share some thoughts on the benefits and risks of Neuralink, and of brain-computer interface (BCI) technologies more broadly. Whilst some of the risks seem very futuristic, and conditional on a technology that may never become feasible, I think it’s important to keep the bigger picture in mind, especially because Elon Musk’s self-declared goal is to enable humans to “merge” with Artificial Intelligence (AI) in order to protect us from losing control to increasingly advanced AI.2

1. How Neuralink and other BCIs work

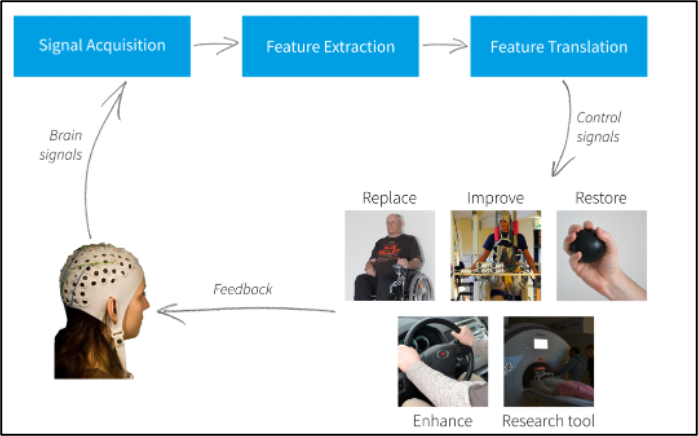

BCIs are computer-based systems that allow for direct communication between the brain and an external device such as a computer.3

The image above gives a simplified depiction of how a BCI works: the BCI acquires brain signals, analyzes them and translates them into commands that are sent to the computer.4 The artificial output “replaces, restores, enhances, supplements, or improves natural [Central Nervous System] CNS output and thereby changes the ongoing interactions between the CNS and its external or internal environment”.5
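To make the three steps concrete (acquire, analyze, translate), here is a minimal Python sketch. It is only an illustration under loud assumptions: the "brain signal" is a synthetic sine wave with noise, the feature (mean signal power), the threshold and the command names `MOVE_CURSOR` and `IDLE` are all hypothetical, and none of this reflects how Neuralink or any real BCI is implemented.

```python
import math
import random
from typing import List


def acquire_signal(n_samples: int, amplitude: float, seed: int = 0) -> List[float]:
    """Step 1 (acquire): stand-in for a real recording.

    Assumption: a synthetic sine wave plus small uniform noise, not real brain data.
    """
    rng = random.Random(seed)
    return [
        amplitude * math.sin(2 * math.pi * 10 * t / n_samples)
        + rng.uniform(-0.05, 0.05)
        for t in range(n_samples)
    ]


def extract_feature(signal: List[float]) -> float:
    """Step 2 (analyze): reduce the raw samples to one number, the mean signal power."""
    return sum(x * x for x in signal) / len(signal)


def translate(feature: float, threshold: float = 0.25) -> str:
    """Step 3 (translate): map the feature to a device command.

    The threshold and the command names are hypothetical.
    """
    return "MOVE_CURSOR" if feature > threshold else "IDLE"


def run_pipeline(amplitude: float) -> str:
    """Chain the three steps for one window of 200 samples."""
    signal = acquire_signal(n_samples=200, amplitude=amplitude)
    return translate(extract_feature(signal))


if __name__ == "__main__":
    print(run_pipeline(1.0))  # strong simulated activity
    print(run_pipeline(0.1))  # weak simulated activity
```

In this toy version a strong simulated signal crosses the threshold and a weak one does not. A real system would replace each step with something far more sophisticated, but the overall shape of the pipeline is the same as in the description above.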

Neuralink is an innovative BCI as it involves implanting “neural threads” and a “link” directly into the skull. The neural threads contain electrodes that detect neural electrical signals, which the link can then process, stimulate and transmit. The implantation will allow brain data to be measured more accurately than with previous BCIs and to travel wirelessly to one’s digital devices.6

The procedure for implanting the link is intended to be carried out by a surgical robot.

2. Benefits of Neuralink, other BCIs and human-AI merger

Firstly, Neuralink’s most immediate goal is to help people with severe disabilities such as quadriplegia. Since BCIs such as Neuralink function through thought alone, people who cannot speak or move can “regain independence through the control of computers and devices”.7 According to Musk, implanting artificial neural threads with minimal invasion could solve almost any health problem: the threads could help people regain eyesight, hearing and full body movement, and cure brain illness. Whilst these claims are still highly speculative, Neuralink’s potential to replace almost any missing neural connection does give us a strong reason for hope.8

Secondly, Neuralink and other BCIs could help neuroscientists better understand how the brain works. They give researchers unique access to real-time brain data, allowing them to see which parts of the brain are active during which activities. Moreover, if people communicate directly with their phones and computers, clearer insights into the human psyche can be obtained. Access to brain data seems especially useful if we want to understand the as yet unexplained phenomenon of human consciousness. As Susan Schneider has argued in “Artificial You”, a better understanding of consciousness is vital for making informed decisions on the desirability of human-AI merger.9 This is because once we know where consciousness emanates from, we can better understand whether merging with AI would pose a threat to consciousness. It might then also be possible to design human-AI merger in a way that protects consciousness.

Thirdly, once Neuralink (or other BCIs) are sufficiently advanced, they may be able to record and process our thoughts. This could allow us to save and replay memories. Should these technologies furthermore be implanted into a sufficient number of people, society may be able to communicate directly via thoughts. This could strengthen human connections, given that language is, according to Musk, a much less efficient and authentic form of communication. Telepathy could, for example, allow us to communicate “inexpressible” feelings, or effortlessly share imaginative images and ideas. Furthermore, trust between humans could increase, as it may be harder to lie to people using telepathy. Also, if the courts were granted access to our thoughts in special circumstances, this could enable “perfect” judgements (i.e. punishing only the guilty).

Fourthly, and for Musk most importantly, in the long term Neuralink may enable humans to merge with AI. For transhumanists such as Musk, merging with AI seems to be the only way for humans to remain in control once artificial general intelligence (AGI) exists. Moreover, as stated in the Transhumanist Declaration, a human-AI merger has the potential to significantly upgrade the human experience by leading to superintelligence and radical longevity. Transhumanists “assert the desirability of transcending human limitations by overcoming aging, enhancing cognition, abolishing involuntary suffering, and expanding beyond Earth”.10

3. Risks of Neuralink, BCIs and human-AI merger more broadly

Whilst the goals outlined above may seem utopian, Neuralink and human-AI merger pose serious risks, as argued below. Indeed, there is a danger that the entity that has “joined them” is no longer human at all, meaning that the attempt to “upgrade” humanity could lead to its permanent destruction.

Firstly, there are critical privacy and safety concerns for the individual, due to the danger of hacking and the risk of technology bugs. If the Neuralink is connected to parts of the brain responsible for thoughts and feelings, a hacker might get access to these. The danger that others might access our thoughts is deeply unsettling. Privacy of thoughts is central to our feeling of autonomy and individuality. Moreover, once someone is able to access our thoughts and emotions, who is to say they can’t manipulate them? Hackers may be able to insert technical bugs into our brain chips, changing the way we think, feel, and move. Neuralink seems seriously concerned about security, stating they are building it “into every layer of the product, using strong cryptography, defensive engineering and extensive security auditing”.11 Whilst this is clearly commendable, security experts agree that almost all our devices can be hacked.12 If Neuralink gets authorised, it should then be up to the individual to decide if the technology is safe enough for them, and the burden of proof to show that it is must be on Neuralink. A risk, however, is that the individual may have no genuine choice. If Neuralink advances into a widespread technology that gives us instant access to all the knowledge of the internet, or that allows telepathy, individuals refraining from using it will be left behind. The disadvantages they may face in the labor market might coerce otherwise unwilling people to adopt the brain chips – at the cost of the privacy and integrity of their thoughts and feelings.

Secondly, there are also political dangers associated with advanced uses of Neuralink and other BCI technologies. If sufficiently advanced, they could enable mass surveillance by governments. Currently, even the most authoritarian states don’t have direct access to our thoughts. Freedom of thought is the only freedom left to people living in such systems. The ability to punish citizens who merely think critically of the regime would enable a new reign of even more brutal totalitarianism. Furthermore, BCIs could lead to more deadly warfare. Advanced human-machine pairings will provide a competitive advantage.13 Whilst this can be employed to minimize casualties, it can also be used to maximize damage by attacking critical infrastructure or specifically targeting a minority.

Finally, looking at human-AI merger more broadly, three key risks for humanity as a whole should be flagged. The first risk is that humans won’t properly handle the huge increase in intelligence and power granted to them. As Yuval Noah Harari provocatively asked in Sapiens: “Is there anything more dangerous than dissatisfied and irresponsible gods who don’t know what they want?”14 Moreover, if only a small minority of current humans have the means to become continuously self-improving “gods”, this will create unprecedented inequality – inequality on a biological level which the “left-behind” might never catch up with. A second risk is that, whilst the goal of merging with AI is for humans to retain control, it is very hard to predict whether they will. For this to happen, it seems that the AI must be aligned with human values. AI alignment is, however, one of the biggest challenges in AI safety. The third risk is that we cannot tell for sure whether the merged human-AI will remain a conscious human at all. This point is stressed by Susan Schneider in “Artificial You”: we do not know what makes us conscious. If we replace the parts of the brain responsible for consciousness with brain chips that do not generate consciousness, we would turn ourselves into AI zombies.15

Conclusion and next steps

For all the reasons outlined above, Neuralink, other BCIs and any attempt at human-AI merger ought only to be adopted with utmost caution. Whilst Neuralink’s benefits in my opinion outweigh its risks when it comes to treating severe disabilities and neurological disorders, other uses appear too dangerous to promote at this point. This final section will outline next steps we as a society should arguably take to minimize the risks.

Firstly, it is critical for us to gain a better understanding of the risks raised above. Would an advanced BCI allow mind-reading? How is consciousness generated? How can we ensure AI is aligned? What human values should AI align with? These are all questions we should be confident in answering before gambling with humanity’s future.

Secondly, greater investments should be made into information security and AI safety systems. The burden of proof to show that Neuralink and other BCIs are safe to use must lie with the producers.

Thirdly, personal information laws ought to be strengthened. Currently, enforcement of data protection is very weak. Before even more sensitive information such as one’s thoughts is uploaded onto devices or the internet, we must have greater confidence in the efficacy of our protection mechanisms. This goes hand in hand with a need to protect one’s neuro-data. The Neurorights Foundation has pushed for the strengthening of human rights through the granting of five neuro-rights. These include the right to mental privacy, free will, personal identity, fair access to mental augmentation, and protection from bias.16 International consensus on, and effective enforcement of, such neuro-rights would be highly desirable before allowing widespread adoption of “mind-reading” technologies.

Finally, we need to have an open discussion about the benefits and drawbacks of a potential human-AI merger and what we would want such a merger to look like. This is difficult given that no one yet knows what is technically feasible or when a merger would become technologically and financially viable. Moreover, when publicizing sensitive information there is a risk of it getting into the wrong hands. Nonetheless, whether humans should “join them” should not be dictated by a few companies but decided collectively. For, as Winston Churchill said: “democracy is the worst form of government – except for all the others that have been tried”.17

Thank you for reading and all the best

1. “Elon Musk Says Neuralink Implanted Wireless Brain Chip” (BBC News, January 30, 2024) <https://www.bbc.co.uk/news/technology-68137046#:~:text=Tech%20billionaire%20Elon%20Musk%20has> accessed January 31, 2024.

2. “If You Can’t Beat 'Em, Join 'Em — Elon Musk Tweets out the Mission Statement for His AI-Brain-Chip Neuralink” (Business Insider) <https://www.businessinsider.in/tech/news/elon-musk-neuralink-mission-statement-for-artificial-intelligence-brain-chip/articleshow/76871062.cms>.

3. Andrea Kübler, “Welcome” (id.elsevier.com, 2009) <https://www.sciencedirect.com/topics/neuroscience/brain-computer-interface/pdf> accessed March 24, 2022.

4. Jerry J Shih, Dean J Krusienski and Jonathan R Wolpaw, “Brain-Computer Interfaces in Medicine” (2012) 87 Mayo Clinic Proceedings 268 <https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3497935/> accessed March 24, 2022.

5. Jonathan Wolpaw and Elizabeth Wolpaw, Brain-Computer Interfaces: Principles and Practice (Jonathan Wolpaw and Elizabeth Winter Wolpaw eds, Oxford University Press 2012) <https://global.oup.com/academic/product/brain-computer-interfaces-9780195388855?cc=gb&lang=en&>.

6. Neuralink, “Neuralink” (neuralink.com, 2021) <https://neuralink.com/approach/> accessed March 24, 2022.

7. Neuralink, “Neuralink” (neuralink.com, 2021) <https://neuralink.com/applications/> accessed March 24, 2022.

8. “Neuralink: Merging Humans with AI” (www.youtube.com, June 11, 2020) accessed March 24, 2022.

9. Susan Schneider, Artificial You: AI and the Future of Your Mind (Princeton University Press 2019).

10. David Wood, “Transhumanist Declarations” (London Futurists, October 14, 2013) <https://londonfuturists.com/2013/10/14/transhumanist-decalarations/> accessed March 24, 2022.

11. Neuralink, “Neuralink” (neuralink.com, 2021) <https://neuralink.com/applications/> accessed March 24, 2022.

12. Steve Goodbarn, “Keeping Computers Secure Is Becoming a Nearly Impossible Task” (Business Insider, November 4, 2017) <https://www.businessinsider.com/equifax-breach-keeping-computers-secure-is-becoming-a-nearly-impossible-task-2017-11> accessed March 29, 2022.

13. Anika Binnendijk, Timothy Marler and Elizabeth M Bartels, “Brain-Computer Interfaces: U.S. Military Applications and Implications, an Initial Assessment” [2020] www.rand.org <https://www.rand.org/pubs/research_reports/RR2996.html> accessed March 24, 2022.

14. Yuval Noah Harari, Sapiens: A Brief History of Humankind (Random House UK 2014) 466.

15. Susan Schneider, Artificial You: AI and the Future of Your Mind (Princeton University Press 2019).

16. “Mission” (The NeuroRights Foundation) <https://neurorightsfoundation.org/mission> accessed March 24, 2022.

17. Peter Millett, “The Worst Form of Government | Foreign, Commonwealth & Development Office Blogs” (Foreign, Commonwealth & Development Office, March 5, 2014) <https://blogs.fcdo.gov.uk/petermillett/2014/03/05/the-worst-form-of-government/> accessed March 24, 2022.